DeepSeek has recently unveiled its latest advancement in AI modeling: DeepSeek-V2.5. This new release merges the capabilities of its previous models, DeepSeek-V2-0628 and DeepSeek-Coder-V2-0724, into a unified architecture that enhances both general language understanding and coding proficiency.

DeepSeek-V2.5: A Unified AI Model

DeepSeek-V2.5 integrates the strengths of its predecessors, offering a comprehensive solution for a wide range of applications. Key features include:

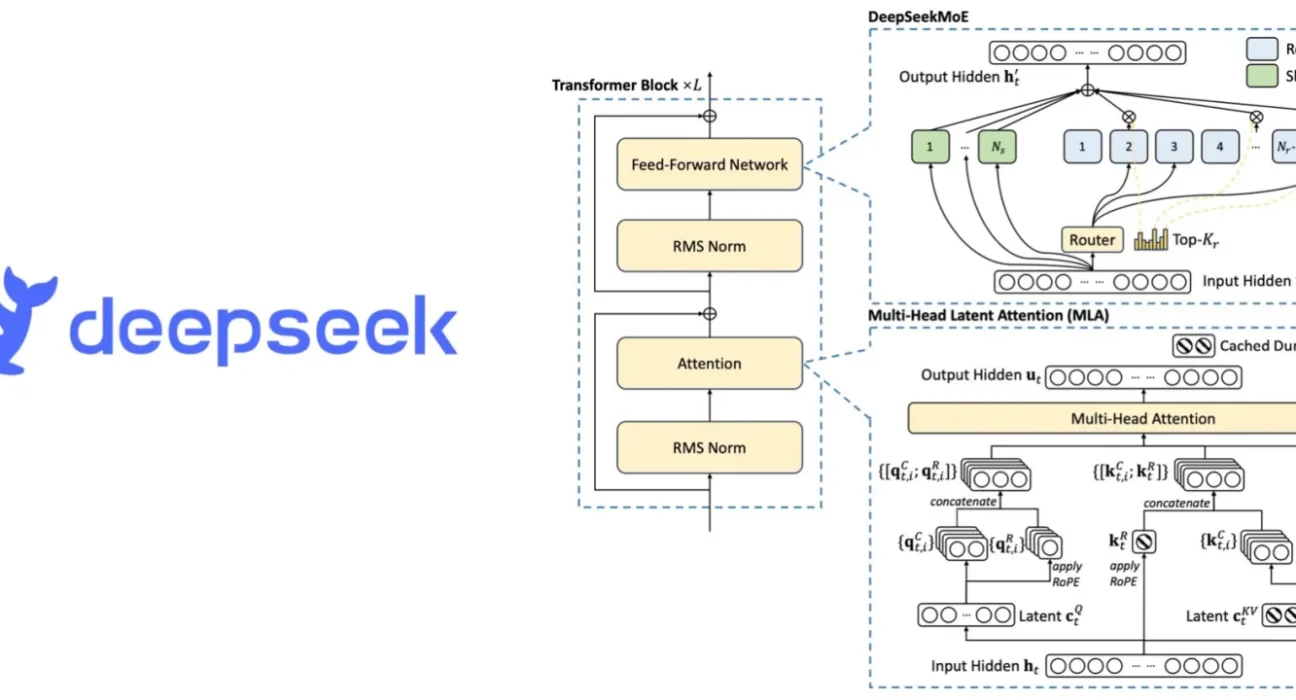

- Mixture-of-Experts (MoE) Architecture: With 236 billion total parameters, of which about 21 billion are activated per token, the model routes each token to a small subset of its 160 routed experts per MoE layer instead of running all of them, which keeps per-token compute well below that of a dense model of the same size.

- Enhanced General and Coding Capabilities: The model demonstrates significant improvements in general language understanding and coding tasks, outperforming previous versions on various benchmarks.

- Extended Context Length: Supporting up to 128K tokens, DeepSeek-V2.5 can process longer and more complex inputs, which benefits tasks that require extensive context.
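To make "activating only a subset of experts" concrete, here is a toy sketch of top-k expert routing. It is not DeepSeek-V2.5's implementation: the sizes (8 experts, k = 2, 4-dimensional tokens) are arbitrary, each hypothetical "expert" is a simple elementwise scale standing in for a feed-forward network, and the selected gate weights are renormalized, a choice some MoE models make and others do not.

```python
import math
import random
from typing import Callable, List

# Toy sizes chosen for readability; these are NOT DeepSeek-V2.5's real settings.
NUM_EXPERTS = 8
TOP_K = 2
DIM = 4


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(seed: int) -> Callable[[List[float]], List[float]]:
    """Hypothetical expert: an elementwise scale standing in for a feed-forward network."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(DIM)]
    return lambda x: [w * v for w, v in zip(weights, x)]


def route(x, gate_weights, experts, k=TOP_K):
    """Score every expert, run only the top-k, and mix their outputs by renormalized gate weight."""
    # One gating logit per expert: dot product of the token with that expert's gate row.
    logits = [sum(g * v for g, v in zip(row, x)) for row in gate_weights]
    probs = softmax(logits)
    # Indices of the k highest-probability experts.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    out = [0.0] * len(x)
    for i in chosen:
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + (probs[i] / norm) * yi for o, yi in zip(out, y)]
    return out, chosen


rng = random.Random(0)
gate = [[rng.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
experts = [make_expert(s) for s in range(NUM_EXPERTS)]
token = [0.5, -1.0, 0.25, 2.0]
output, used = route(token, gate, experts)
print(f"experts used: {used} of {NUM_EXPERTS}; output: {output}")
```

Raising `TOP_K` toward `NUM_EXPERTS` turns this back into a dense layer; the efficiency described above comes from keeping k small relative to the number of experts.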

Performance Benchmarks

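DeepSeek-V2.5 has shown notable gains in several benchmark evaluations covering code generation, mathematical reasoning, and safety; the individual scores are listed under Performance Highlights below.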

Open-Source Accessibility

DeepSeek-V2.5 is available as an open-source model on Hugging Face under a variation of the MIT License. This allows developers and organizations to utilize the model freely, with certain restrictions to prevent misuse.

Practical Applications

The advancements in DeepSeek-V2.5 make it suitable for a variety of applications:

- Conversational AI: Enhanced dialogue capabilities enable more natural and context-aware interactions.

- Code Assistance: Improved code generation and debugging support developers in creating and maintaining software efficiently.

- Content Generation: The model’s proficiency in language tasks aids in generating high-quality written content.

- Data Analysis: Extended context handling allows long reports, logs, and data extracts to be analyzed in a single pass.

DeepSeek-V2.5 represents a significant step forward in AI model development, combining advanced capabilities with open accessibility. Its performance improvements and versatile applications position it as a valuable tool for developers and organizations seeking to leverage AI in various domains.

Key Features of DeepSeek-V2.5

- Mixture-of-Experts (MoE) Architecture: DeepSeek-V2.5 uses a 236 billion parameter model with 160 routed experts per MoE layer, activating only 6 of them (alongside 2 shared experts) for each token. This keeps per-token compute low while still letting the model draw on specialized experts across a wide range of user tasks.

- Strong General and Coding Abilities: The model shows marked improvement on both natural language and programming tasks, with high scores on widely used benchmarks such as HumanEval and MATH-500.

- Extended Context Length (128K tokens): With the ability to handle long input sequences, it supports complex workflows in content generation, code completion, and large-scale data analysis.
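A 128K-token window is generally a budget shared by the prompt and the generated reply. The sketch below shows one way a pipeline might check that budget and split an over-long document into overlapping chunks. Several assumptions are labeled in the code: 128K is read as 128 * 1024 tokens, the 4096-token output reserve is arbitrary, and `count_tokens` is a hypothetical whitespace-based stand-in for the model's real tokenizer, so actual counts will differ.

```python
from typing import List

# Assumption: "128K" is read as 128 * 1024 tokens; check the model card for the exact limit.
CONTEXT_TOKENS = 128 * 1024


def count_tokens(text: str) -> int:
    """Hypothetical stand-in: whitespace split. A real pipeline would use the model's tokenizer."""
    return len(text.split())


def fits(prompt: str, reserve_for_output: int = 4096, limit: int = CONTEXT_TOKENS) -> bool:
    """True if the prompt plus a reserved output budget (arbitrary default) fits in the window."""
    return count_tokens(prompt) + reserve_for_output <= limit


def chunk_words(text: str, max_tokens: int, overlap: int = 0) -> List[str]:
    """Split text into windows of at most max_tokens tokens, sharing `overlap` tokens between neighbours."""
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")
    words = text.split()
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # this window already reached the end of the text
    return chunks


doc = "word " * 300_000
print(fits(doc))  # → False
pieces = chunk_words(doc, CONTEXT_TOKENS - 4096, overlap=1000)
print(len(pieces))  # → 3
```

The overlap trades a little redundancy for keeping text that straddles a chunk boundary visible in both neighbouring chunks.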

Performance Highlights

- HumanEval (Code Generation): 90.2% pass rate

- LiveCodeBench (Contamination-Free Coding): Improved from 29.2% to 34.38%

- MATH-500 (Math Reasoning): Increased from 74.8% to 82.8%

- Safety Score: Achieved 82.6%, with the safety spillover rate (lower is better) reduced to 4.6%

These numbers indicate gains across code generation, mathematical reasoning, and safety evaluations.
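The "from X to Y" figures above can be read as absolute gains (percentage points) or relative gains. The short calculation below only restates the reported LiveCodeBench and MATH-500 numbers in both forms; it does not verify them, and `gains` is a hypothetical helper name.

```python
# Scores copied from the Performance Highlights list (percent).
results = {
    "LiveCodeBench": (29.2, 34.38),
    "MATH-500": (74.8, 82.8),
}


def gains(before: float, after: float) -> tuple:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = after - before
    relative = 100.0 * absolute / before
    return absolute, relative


for name, (before, after) in results.items():
    abs_pts, rel_pct = gains(before, after)
    print(f"{name}: +{abs_pts:.2f} points ({rel_pct:.1f}% relative)")
# LiveCodeBench: +5.18 points (17.7% relative)
# MATH-500: +8.00 points (10.7% relative)
```

The larger absolute jump is on MATH-500, while LiveCodeBench shows the larger relative improvement because it starts from a lower baseline.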

Open-Source Commitment

DeepSeek-V2.5 is available as an open-source model on Hugging Face under a modified MIT license. This ensures accessibility to the AI community while including usage limitations to prevent misuse or abuse.

Applications and Use Cases

DeepSeek-V2.5 is designed for real-world utility, with wide applicability across:

- Conversational AI: Enhanced dialogue capabilities for customer support, tutoring, or virtual agents.

- Code Assistance: Reliable code generation, debugging, and software suggestions for developers.

- Content Generation: High-quality article writing, storytelling, and summarization.

- Data Analytics: Interpretation and analysis of long reports, logs, and data extracts within the 128K-token context window.